All good things (OpenSolaris) must come to an end

This article was posted by Matty on 2011-01-09 17:59:00 -0400

This past weekend I unsubscribed from my last OpenSolaris mailing list. While thinking about where technology is heading, I took a few minutes to reflect on where things were just a few years back. I remember vividly the day that the opensolaris.org website came online. After the announcement came out, I spent 24 straight hours signing up for mailing lists, reading documentation and reviewing the source code for a number of utilities. This had always been easy with Linux, since all of the code and documentation was publicly available. But when the Solaris source came online, I felt like a 4-year-old in a HUGE candy store.

Over the next few months I saw the community start to grow at a decent pace. The first OpenSolaris books (OpenSolaris Bible and Pro OpenSolaris) were published, Solaris Internals was updated to take Solaris 10 and OpenSolaris into account, and every major trade magazine was writing something about OpenSolaris. Additionally, our local OpenSolaris users group was starting to grow in size, and I was beginning to make a number of good friends in the community. All of these things got me crazy excited about the OpenSolaris community, and I wanted to jump in and start helping out any way I could.

After pondering all of the things I wanted in OpenSolaris, I came up with a simple change that would allow me to get familiar with the development model. The change I proposed and coded up would allow dd to print the status of the copy operation when it received a SIGUSR1 signal. This feature was available on my FreeBSD and Linux boxes, so I wanted to see it on my Solaris hosts as well. I went through the hassle of filling out a form to submit code, and then I sent my changes over to my sponsor. He replied stating that he would look things over and get back to me. That was the last time I heard from him, and my follow-up e-mail didn’t receive a response either.
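For reference, the Linux behavior described above can be sketched like this. It assumes GNU coreutils dd, which prints its I/O statistics to stderr when it receives SIGUSR1; other dd implementations may react differently to that signal. The function name dd_progress_demo and the one-second sleeps are illustrative choices, not part of the original patch.

```shell
# Hypothetical demo: ask a running GNU dd for a progress report via SIGUSR1.
dd_progress_demo() {
    log=$(mktemp)
    # Copy zeros to /dev/null until told to stop. dd reports on stderr,
    # which is captured in $log. LC_ALL=C keeps the report in English.
    LC_ALL=C dd if=/dev/zero of=/dev/null bs=1M 2>"$log" &
    pid=$!
    sleep 1                  # give dd time to install its signal handler
    kill -USR1 "$pid"        # GNU dd prints records in/out and bytes copied
    sleep 1                  # give dd time to write the report
    kill -TERM "$pid"        # end the otherwise endless copy
    wait "$pid" 2>/dev/null || true
    cat "$log"
    rm -f "$log"
}
```

Calling dd_progress_demo prints the "records in" and "records out" lines that dd emitted in response to the signal, while the copy itself kept running until it was terminated.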

I am not the type of person to pester someone to do something, so I didn’t send another e-mail to request status. This of course led to my proposal dying a silent death. :( This was the first thing that led me to wonder if OpenSolaris would truly flourish, since all of the source code enhancements I had made to other projects were added back within days (and usually the authors were grateful). Since I knew contributing code was most likely not going to work, I decided to be active on the forums and propose changes that would better Solaris. This is when I started to get the impression that most of the design and development was happening behind closed doors, and not out in the open. Linux has prided itself on openness when it comes to design and development, so once again I started to question whether OpenSolaris would flourish.

So fast forward to the recent announcement by Oracle that opensolaris design and development would not be happening out in the open. In my opinion this never really occurred in the past, so I wasn’t one bit surprised by this announcement. They want to capitalize on the product (Solaris) they bought, and I can’t really fault them for that. Some people appear to have been caught off guard by this announcement, but the second Oracle bought Sun I figured open development would most likely stop. My only remaining question was what would happen to Solaris? Will Oracle eventually scrap it in favor of Linux? The cost to support two operating systems has to be relatively large, and I have to assume that there are some folks at Oracle who are evaluating this.

The Oracle announcement appears to have stirred some things up, and a number of new things came about as a result of it. The Illumos project was started with the goal of making OpenSolaris development open. While this is a great idea in theory, I’m skeptical that the project can truly succeed without Sun/Oracle engineering. The amount of code in OpenSolaris is rather large, and I have to assume that you would need an army of engineers to design, develop and QA everything to make it battle ready. I truly wish this project the best, and hope it gets the momentum it needs to succeed (Garrett D’Amore is a sharp dude, so the source is definitely in good hands!).

About a year ago I ditched Solaris in favor of Red Hat Linux, which appears to be a growing trend amongst my SysAdmin friends. I like that Linux development is truly open, and the distributions I use (RHEL, CentOS and Fedora) provide the source code to the entire operating system. The Linux distributions I use also have a large number of users, so getting answers to support or configuration issues is typically pretty easy to do. There is also the fact that the source is available, so I can support myself if no one happens to know why something is behaving a specific way.

This post wasn’t meant to diss Solaris, OpenSolaris or Illumos. I was purely reflecting on the road I’ve traveled prior to embracing Linux and giving up hope in the OpenSolaris community. Hopefully one day Oracle will make all of the awesome Solaris features (DTrace, ZFS, Zones, Crossbow, FMA) available to the Linux community by slapping a GPLv2 license on the source code. I would love nothing more than to have all of the things I love about Linux merged with the things I love about Solaris. This would be a true panacea as far as operating systems go! :)

Getting started with Windows Server 2008

This article was posted by Matty on 2011-01-09 12:14:00 -0400

This past year I finally took the plunge and started learning Windows Server. At first I was extremely apprehensive about this, but as I thought about it more I realized that a lot of companies are successfully using Windows Server for one or more reasons. In addition, most environments have a mix of Windows, Linux and Solaris, so this would help me understand all the pieces in the environment I support.

Being the scientific person I am, I decided to do some research to see just how viable Windows Server was. To begin my experiments, I picked up a copy of Windows Server 2008 Inside Out. This was a fantastic book, and really laid the groundwork for how Windows, Active Directory and the various network services work. The book piqued my interest, my true geek came out, and I committed myself to learning more.

To go into more detail, I signed up for a number of Microsoft training courses. The first class I took was Maintaining a Microsoft SQL Server 2005 Database. This course helped out quite a bit, and provided some immediate value when I needed to do some work on my VMware VirtualCenter database (VMware VirtualCenter uses SQL Server as its back-end database).

After my SQL skills were honed by reading and hands-on practice, I signed up for Configuring and Troubleshooting a Windows Server 2008 Network Infrastructure and Configuring and Troubleshooting Windows Server 2008 Active Directory Servers. These classes were pretty good, and went into a lot of detail on the inner workings of Active Directory, DNS, WINS, DHCP, DFS, role management and group policy, as well as a bunch of other items related to security and user management.

While the courses were useful, I wouldn’t have attended them if I had to pay for them out of my own pocket (my employer covered the cost of the classes). I’m pretty certain I could have learned just as much by reading Windows Server 2008 Inside Out and Active Directory: Designing, Deploying, and Running Active Directory, and by spending a bunch of time experimenting with each service (this is the best way to learn, right?).

That said, I’m planning to get Microsoft certified this year. That is probably the biggest reason to take the classes, since they lay out the material in a single location. I haven’t used any of the Microsoft certification books, but I suspect they would help you pass the tests without dropping a lot of loot on the courses. Once I take and pass all of the tests I’ll make sure to update this section with additional detail.

If you’ve been involved with Windows Server, I’d love to hear how you got started. I’m hoping to write about some of the Windows-related stuff I’ve been doing, especially the stuff related to getting my Linux hosts to work in an Active Directory environment. Microsoft and Windows Server are here for the foreseeable future, so I’m planning to understand their pros and cons and use them where I see a good fit. There are things Solaris and Linux do better than Windows Server, and things Windows Server does better than Linux and Solaris. Embracing the right tool (even if it is a Microsoft product) for the job is the sign of a top-notch admin in my book. Getting myself to think that way took a LOT of work. ;)

A nice graphical interface for smartmontools

This article was posted by Matty on 2011-01-07 10:59:00 -0400

In my article Out SMART your hard drive, I discussed smartmontools and the smartctl command line utility in detail. The article shows how to view SMART data on a hard drive, how to conduct self-tests and how to configure smartmontools to generate alerts when a drive is about to fail or has failed. Recently I learned about GSmartControl, which is a graphical front-end to smartmontools. While I’ve only played with it a bit, it looks like a pretty solid piece of software! The project website has a number of screenshots, and you can download the source from there. Nice!
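For context, a graphical front-end like this sits on top of smartctl invocations along the following lines. This is only a sketch: the smart_check wrapper and the DRY_RUN variable are hypothetical, /dev/sda is a placeholder device, and exactly which commands GSmartControl issues is not covered here.

```shell
# Hypothetical wrapper: print (DRY_RUN=1, the default here) or run the
# basic smartctl checks for a single drive.
smart_check() {
    dev=$1
    # -H: overall health, -a: all SMART information,
    # -t short: start a short self-test, -l selftest: show the self-test log
    for args in "-H" "-a" "-t short" "-l selftest"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "smartctl $args $dev"
        else
            # $args is intentionally unquoted so "-t short" splits into two words
            smartctl $args "$dev"
        fi
    done
}
```

Running smart_check /dev/sda prints the four commands without touching the drive. Setting DRY_RUN=0 executes them, which normally requires root and an installed smartmontools package.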

A simple and easy way to copy a file system between two Linux servers

This article was posted by Matty on 2011-01-01 23:14:00 -0400

During my tenure as a SysAdmin, I can’t recall how many times I’ve needed to duplicate the contents of a file system between systems. I’ve used a variety of solutions to do this, including array-based replication, database replication and tools such as rsync and tar piped to tar over SSH. When rsync and tar were the right tools, I often asked myself once the work was done why there wasn’t a generic file system replication tool. Well, it appears there is. The cpdup utility provides an easy to use interface to copy a file system from one system to another. In its most basic form you can call cpdup with a source and destination file system:

$ cpdup -C -vv -d -I /data 192.168.56.3:/data

root@192.168.56.3's password:

Handshaked with fedora2

/data

Scanning /data ...

Scanning /data/conf ...

/data/conf/main.cf.conf copy-ok

/data/conf/smb.conf copy-ok

/data/conf/named.conf copy-ok

Scanning /data/www ...

Scanning /data/www/content ...

Scanning /data/www/cgi-bin ...

Scanning /data/dns ...

/data/lost+found

Scanning /data/lost+found ...

cpdup completed successfully

1955847 bytes source, 1955847 src bytes read, 0 tgt bytes read

1955847 bytes written (1.0X speedup)

3 source items, 8 items copied, 0 items linked, 0 things deleted

3.8 seconds 1007 Kbytes/sec synced 503 Kbytes/sec scanned

This will cause the entire contents of /data to be migrated through SSH to /data on the remote server. It appears cpdup picks up everything including devices and special files, so this would be a great utility to clone systems. There are also options to replace files that are on the remote end, remove files that are no longer on the source, and various others that can be used to customize the copy operation. Nifty utility, and definitely one I’ll be adding to my utility belt.
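For comparison, the tar-piped-to-tar approach mentioned at the start of this post can be sketched as follows. The copy_tree helper is hypothetical and copies between two local directories so it can be tried safely; the remote and rsync variants in the comments use placeholder host names and paths.

```shell
# Hypothetical helper: replicate directory tree $1 into $2 with a tar pipe.
copy_tree() {
    src=$1
    dst=$2
    mkdir -p "$dst"
    # The first tar streams the tree to stdout; the second unpacks it,
    # preserving permissions (-p).
    tar -cf - -C "$src" . | tar -xpf - -C "$dst"
    # Remote variant (assumes SSH access; user@host and /data are placeholders):
    #   tar -cf - -C "$src" . | ssh user@host 'tar -xpf - -C /data'
    # rsync equivalent (-a archive mode, -H keep hard links):
    #   rsync -aH "$src"/ user@host:/data/
}
```

A call such as copy_tree /data /mnt/data-copy duplicates the tree locally. Unlike the cpdup run above, this sketch prints no per-file status and no summary.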

The easiest way to test the memory in your Intel-based PC!

This article was posted by Matty on 2010-12-29 20:31:00 -0400

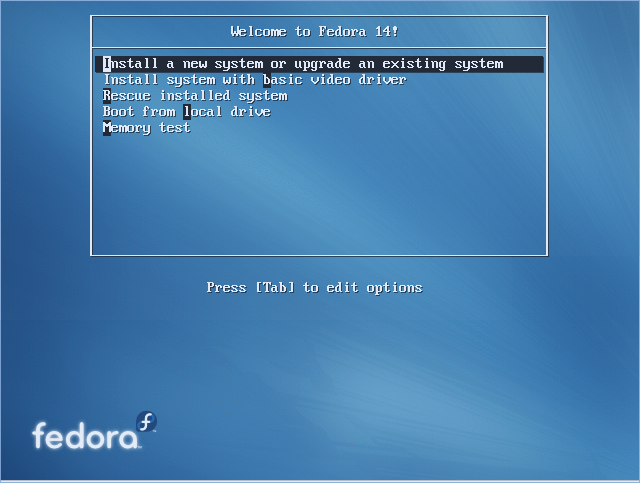

Most admins have a series of tools they use to check for faulty hardware. This toolkit most likely includes the ultimate boot disk, a network accessible memtest and preclear_disk.sh on a USB stick. I was always curious why Linux distributions didn’t integrate these items into their install / live CDs, since it would make debugging flaky hardware a whole lot easier. Well, I was pleasantly surprised this week when I booted the Fedora 14 installation DVD and saw the following screen:

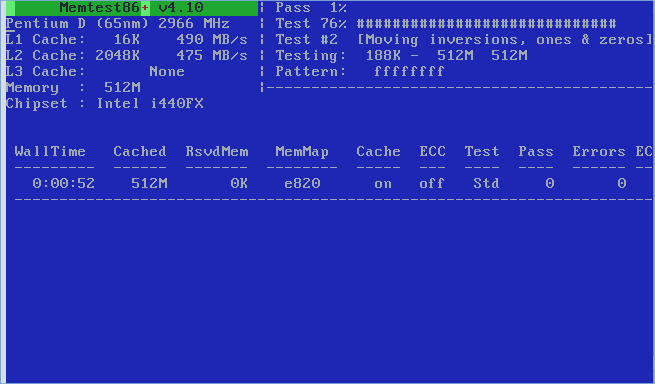

Once I selected the memory test option I was thrown directly into memtest.

This is solid, since one disc can now be used to test the memory in a server and repair things that go south. The Fedora 14 DVD can be downloaded from the Fedora project website, and it’s definitely something that every Fedora admin should burn and store in a readily accessible location.