Concert review: Guns N' Roses with special guest Buckcherry

This article was posted by Matty on 2011-11-03 20:19:00 -0400

If you’ve seen any of my past concert reviews, you know that music is one of the things I love. I try to see as many shows as possible, and I am constantly mixing up my musical genres. One day you will catch me listening to Alice In Chains, the next day I’ll be listening to Toby Keith, and the day after that I’ll throw on some techno. Of all the bands I’ve listened to, Guns N’ Roses has probably received the most airplay on my home stereo (I have all of their albums, but Appetite For Destruction has definitely gotten the most spins).

It seems like just yesterday I was sitting in my parents’ recliner watching MTV (back when they played music videos). The opening riff to Welcome To The Jungle came on, and I still remember the scene where Axl gets off the bus and heads out into the city. That was a pivotal point in my life, and I have been one of the most hardcore GNR fans ever since. So fast forward to this week. The new GNR (Axl Rose with an all-new supporting cast) came to town, and I was fortunate enough to get tickets to the show.

A local band started the night off, though I didn’t catch their name. Buckcherry was next, and they played an amazing set that included “Sorry,” “Crazy Bitch” and “Everything,” to name a few. Josh Todd is an amazing lead singer with a phenomenal stage presence. During their performance I commented to my girlfriend that very few bands have a solid presence on stage; most have no idea how to get people into the show. IMHO that is one of the reasons you spend big bucks to come out and see a band.

Once Josh and the boys finished up, we got to sit around for close to two hours while the crew prepared the stage for GNR. The lights eventually went dim, and the band members came on stage to the theme song of the TV series Dexter (I wonder if Showtime can ever top Dexter season 4). They then cut into their first song of the night, Chinese Democracy, the title track from their relatively new album. Once they finished Chinese Democracy, the crowd got something they had all been waiting for: the opening riff to Welcome To The Jungle. Everybody and their brother knows the intro, and Axl and the boys played a killer version of it. Awesome!

Given that the band had been out of commission for years, I was amazed at how sharp Axl was. He was dancing around, he looked like he was having fun, and his interaction with his bandmates looked solid. He kept running off the stage when he wasn’t singing, and I kept asking myself, “Is Axl OK?” Based on his performance and the fact that they didn’t stop the show, I think Axl was A-OK.

Now on to the setlist. They performed almost every hit you can think of (no “Civil War”), including “Welcome To The Jungle,” “Nightrain,” “Live And Let Die,” “November Rain,” “Rocket Queen,” “Sweet Child O’ Mine,” “Patience,” “Knockin’ On Heaven’s Door,” “It’s So Easy,” “Mr. Brownstone,” “Don’t Cry” and a fricking awesome rendition of “Paradise City.” You can see the full set list here.

So how would I rate this experience? I have to give it ten out of ten stars. The music sounded amazing, the band played for 3 hours (the concert didn’t let out until 2am), Axl was in good spirits, and I got to hear a bunch of my favorite songs performed live by the original lead singer. The only thing that would have made this experience better would have been the original GNR members (Slash, Duff, etc.) taking part in the festivities. Good times, my peeps!

Using rpcdebug to debug Linux NFS client and server issues

This article was posted by Matty on 2011-11-02 08:31:00 -0400

Debugging NFS issues can sometimes be a chore, especially when you are dealing with busy NFS servers. Tools like nfswatch and nfsstat can help you understand what operations your server is servicing, but sometimes you need to get into the protocol guts to find out more. There are a couple of ways you can do this. First, you can capture NFS traffic with tcpdump and use the NFS protocol decoding features built into Wireshark. This lets you see the NFS traffic going over the network, which in most cases is enough to solve a given caper.

Alternatively, you can use the rpcdebug utility to log additional debugging data on a Linux NFS client or server. The debugging data includes information from the RPC module, the lock manager (nlm) module, and the NFS client (nfs) and server (nfsd) modules. The debugging flags you can set inside these modules can be displayed with the rpcdebug “-vh” option:

```
$ rpcdebug -vh
usage: rpcdebug [-v] [-h] [-m module] [-s flags...|-c flags...]
  set or cancel debug flags.

Module     Valid flags
rpc        xprt call debug nfs auth bind sched trans svcsock svcdsp misc cache all
nfs        vfs dircache lookupcache pagecache proc xdr file root callback client mount all
nfsd       sock fh export svc proc fileop auth repcache xdr lockd all
nlm        svc client clntlock svclock monitor clntsubs svcsubs hostcache xdr all
```
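As far as I can tell, rpcdebug works by setting bits in a per-module debug mask that the kernel exposes under /proc/sys/sunrpc/ (nfsd_debug for the server module), and each flag name in the table above maps to one bit. The Python sketch below shows that mapping for the nfsd module. The bit values are an assumption based on the NFSDDBG_* constants in the kernel headers (their order matches the flag list above), so double-check them against your kernel before relying on them; encode_flags and decode_flags are hypothetical helpers.

```python
# ASSUMPTION: bit values mirror the kernel's NFSDDBG_* constants
# (sock=0x0001 ... lockd=0x0200, all=0x7FFF). Verify for your kernel.
NFSD_FLAGS = {
    "sock": 0x0001,
    "fh": 0x0002,
    "export": 0x0004,
    "svc": 0x0008,
    "proc": 0x0010,
    "fileop": 0x0020,
    "auth": 0x0040,
    "repcache": 0x0080,
    "xdr": 0x0100,
    "lockd": 0x0200,
}
NFSD_ALL = 0x7FFF


def encode_flags(names):
    """Turn flag names (as passed to rpcdebug -s) into a debug mask."""
    mask = 0
    for name in names:
        if name == "all":
            mask |= NFSD_ALL
        else:
            mask |= NFSD_FLAGS[name]  # raises KeyError for unknown flags
    return mask


def decode_flags(mask):
    """Return the named flags set in a debug mask, in bit order."""
    return [name for name, bit in NFSD_FLAGS.items() if mask & bit]
```

Under these assumptions, rpcdebug -m nfsd -s proc corresponds to a mask of 0x10, while all sets every bit in 0x7FFF.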

To enable a specific set of flags, pass rpcdebug the name of a module (-m) and the flags to set (-s):

```
$ rpcdebug -m nfsd -s proc
nfsd       proc
```

In the example above I told the nfsd module to log debugging data about the RPC procedures it receives. If I hop on an NFS client and write 512 bytes to a file, I see the NFS procedures that were issued in the server’s messages file:

```
Nov 1 09:53:18 zippy kernel: nfsd: LOOKUP(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 foo1
Nov 1 09:53:18 zippy kernel: nfsd: CREATE(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 foo1
Nov 1 09:53:18 zippy kernel: nfsd: WRITE(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 512 bytes at 0
Nov 1 09:53:18 zippy kernel: nfsd: COMMIT(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 0@0
```
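If you capture a lot of these lines, it helps to pull them apart programmatically. The Python sketch below splits a procedure line into the procedure name, protocol version, file handle text and whatever arguments follow, and tallies procedures across a log. The regular expression is inferred from the sample lines above (it is not a documented log format), and parse_proc_line and tally_procs are hypothetical helper names.

```python
import re
from collections import Counter

# Layout inferred from sample lines such as:
#   nfsd: WRITE(3) 36: 01070001 ... d2aac8b3 512 bytes at 0
PROC_RE = re.compile(
    r"nfsd: (?P<proc>[A-Z]+)\((?P<vers>\d+)\) "
    r"(?P<fh>\d+:(?: [0-9a-f]{8}){6})"
    r"(?: (?P<args>.*))?$"
)


def parse_proc_line(line):
    """Return (procedure, version, file handle, trailing args) or None."""
    m = PROC_RE.search(line)
    if m is None:
        return None  # not a procedure line (e.g. fh_verify, nfsd_dispatch)
    return m["proc"], int(m["vers"]), m["fh"], m["args"] or ""


def tally_procs(lines):
    """Count how many times each procedure shows up in the log lines."""
    counts = Counter()
    for line in lines:
        parsed = parse_proc_line(line)
        if parsed:
            counts[parsed[0]] += 1
    return counts
```

Feeding the four lines above to tally_procs should report one each of LOOKUP, CREATE, WRITE and COMMIT.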

To log ALL of the nfsd debugging data possible, you can set the flags to all (rpcdebug -m nfsd -s all). This enables every flag in the module, which causes an excessive amount of data to be logged (don’t do this on production servers unless you have a specific need to do so!). When you are finished, the -c option clears the flags again (rpcdebug -m nfsd -c all). Here is the output you would see from a single 512-byte write when all of the flags are enabled:

```
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 4
Nov 1 09:56:18 zippy kernel: nfsd: ACCESS(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 0x1f
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 1
Nov 1 09:56:18 zippy kernel: nfsd: GETATTR(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 4
Nov 1 09:56:18 zippy kernel: nfsd: ACCESS(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 0x2d
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 2
Nov 1 09:56:18 zippy kernel: nfsd: SETATTR(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 7
Nov 1 09:56:18 zippy kernel: nfsd: WRITE(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 512 bytes at 0
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
Nov 1 09:56:18 zippy kernel: nfsd: write complete host_err=512
Nov 1 09:56:18 zippy kernel: nfsd_dispatch: vers 3 proc 21
Nov 1 09:56:18 zippy kernel: nfsd: COMMIT(3) 36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3 0@0
Nov 1 09:56:18 zippy kernel: nfsd: fh_verify(36: 01070001 0003fe95 00000000 ec426dec 9f402ff3 d2aac8b3)
```
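The nfsd_dispatch lines only carry the protocol version and a procedure number. Those numbers come from the NFSv3 procedure list in RFC 1813, so a small lookup table turns them into names. The Python sketch below does that (dispatched_procs is a hypothetical helper); applied to the log above, each name it yields matches the procedure logged on the line right after the dispatch line.

```python
import re

# NFSv3 procedure numbers 0-21 as listed in RFC 1813.
NFS3_PROCS = [
    "NULL", "GETATTR", "SETATTR", "LOOKUP", "ACCESS", "READLINK",
    "READ", "WRITE", "CREATE", "MKDIR", "SYMLINK", "MKNOD",
    "REMOVE", "RMDIR", "RENAME", "LINK", "READDIR", "READDIRPLUS",
    "FSSTAT", "FSINFO", "PATHCONF", "COMMIT",
]

DISPATCH_RE = re.compile(r"nfsd_dispatch: vers (\d+) proc (\d+)")


def dispatched_procs(lines):
    """Yield a procedure name for every NFSv3 nfsd_dispatch line."""
    for line in lines:
        m = DISPATCH_RE.search(line)
        if m and int(m.group(1)) == 3:
            num = int(m.group(2))
            # Fall back to a placeholder for numbers outside the table.
            yield NFS3_PROCS[num] if num < len(NFS3_PROCS) else "UNKNOWN(%d)" % num
```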

Now you may be asking yourself: what is that stuff following the procedure name? It is the contents of the data structures sent as part of a given procedure. You can view the data structures and their members by opening up the relevant NFS RFC (RFC 1813 for NFSv3) and checking the argument list. Here is what the NFSv3 RFC says about the arguments passed to the WRITE procedure:

```
struct WRITE3args {
     nfs_fh3     file;
     offset3     offset;
     count3      count;
     stable_how  stable;
     opaque      data<>;
};
```

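The structure contains the file handle to perform the operation on, the offset at which to perform the write, and the number of bytes to write. It also contains a stable field (UNSTABLE, DATA_SYNC or FILE_SYNC) that tells the server whether it must commit the data to stable storage before returning a success code to the client; with UNSTABLE, the client follows up later with a COMMIT, which is consistent with the COMMIT that follows the WRITE in the logs above. The last field contains the data itself.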
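To make the field layout concrete, here is a Python sketch that serializes WRITE3args using the XDR rules the RFC relies on: big-endian 32-bit integers, a 64-bit offset, and variable-length opaques prefixed with their length and zero-padded to a 4-byte boundary. It is a simplified illustration, not a usable NFS client: encode_write3args is a hypothetical helper, it sets count to the length of data, and it leaves out the RPC header and credentials that precede the arguments on the wire.

```python
import struct

# stable_how values from RFC 1813.
UNSTABLE, DATA_SYNC, FILE_SYNC = 0, 1, 2


def xdr_opaque(data):
    """Variable-length XDR opaque: 4-byte length, bytes, zero pad to 4."""
    pad = (4 - len(data) % 4) % 4
    return struct.pack(">I", len(data)) + data + b"\x00" * pad


def encode_write3args(fh, offset, data, stable=UNSTABLE):
    """Encode WRITE3args in field order: file, offset, count, stable, data."""
    return (
        xdr_opaque(fh)                   # nfs_fh3 file (opaque, max 64 bytes)
        + struct.pack(">Q", offset)      # offset3 offset (unsigned 64-bit)
        + struct.pack(">I", len(data))   # count3 count (unsigned 32-bit)
        + struct.pack(">i", stable)      # stable_how stable (enum, 32-bit)
        + xdr_opaque(data)               # opaque data<>
    )
```

For a 36-byte file handle (the size shown in the log lines) and 512 bytes of data, the encoded arguments come to 572 bytes: 40 for the handle, 8 for the offset, 4 each for count and stable, and 516 for the data.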

Once you have a better understanding of the fields passed to a procedure, you can cross-reference the lines in the messages file with the dprintk() statements in the kernel. Here is a dprintk() statement from the nfsd WRITE code:

```c
dprintk("nfsd: WRITE %s %d bytes at %d\n",
        SVCFH_fmt(&argp->fh),
        argp->len, argp->offset);
```

This lines up with the contents of the log file (the log entries also carry a (3) version tag, which comes from the NFSv3 variant of this statement), and the dprintk() code shows the order in which everything is logged. This is useful stuff, though it’s probably not something you will need to access daily. ;) I’m documenting it here so I can recall this procedure down the road.
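Because dprintk() takes printf-style format strings, you can replay one with Python's % operator to check how the fields line up. In the sketch below, fmt_fh is a hypothetical helper that mimics the file handle text in the log (the handle size followed by six 32-bit words in %08x form); that layout is inferred from the log lines above rather than copied from the kernel source.

```python
def fmt_fh(size, words):
    """Mimic the file handle text seen in the nfsd log lines (inferred)."""
    return "%d: %08x %08x %08x %08x %08x %08x" % ((size,) + tuple(words[:6]))


# The six words visible in the log lines above.
fh_words = [0x01070001, 0x0003FE95, 0x00000000,
            0xEC426DEC, 0x9F402FF3, 0xD2AAC8B3]

# Replay the WRITE format string: file handle, byte count, offset.
line = "nfsd: WRITE %s %d bytes at %d\n" % (fmt_fh(36, fh_words), 512, 0)
print(line, end="")
```

The result carries the same fields, in the same order, as the WRITE(3) entry in the messages file, minus the version tag.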

Using Wireshark's protocol decoding to debug NFS problems

This article was posted by Matty on 2011-11-01 19:48:00 -0400

Most admins have probably encountered a situation where someone says “hey, this really bizarre thing <fill_in_the_blank> is occurring.” Whenever I am approached to look at these types of issues, I typically start by jumping on my systems and reviewing system, network and performance data. Once I’ve verified those are within normal levels, I begin reviewing the client-server communications to see what is going on.

I’ve encountered some NFS issues that fit into the “bizarre” category, and it’s amazing how much information you can glean by reviewing the NFS traffic between the client and server. I like to visualize problems, so I will frequently grab the network traffic with tcpdump:

$ tcpdump -w /var/tmp/nfs.dmp -i eth0 host 192.168.56.102 and not port 22

Then re-create the issue and feed the resulting dump into Wireshark:

$ wireshark /var/tmp/nfs.dmp

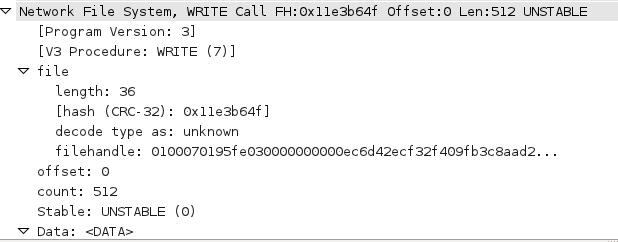

Wireshark will decode all of the NFS procedures for you, which makes comparing the captured packets against the pertinent RFC super easy. The following picture shows how Wireshark decodes an NFS WRITE request from a client:

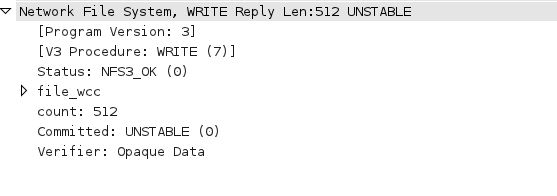

And the response sent back from the server:

I’m not sure how NFS debugging can get any easier, and the capabilities built into Wireshark are absolutely amazing! I reach for it weekly, and if you need to debug NFS protocol issues, Wireshark will soon become your best friend!
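Under the hood, Wireshark has to peel apart the ONC RPC layer before it can show you NFS procedures. The Python sketch below decodes the start of an RPC call as it appears in a TCP stream: a 4-byte record marker (high bit set on the last fragment, low 31 bits holding the fragment length), then the transaction ID, message type, RPC version, program number (100003 for NFS), program version and procedure number. It is only an illustration under simplifying assumptions: the bytes must start at the record marker (no Ethernet, IP or TCP headers), the whole header must sit in one buffer, and parse_rpc_call is a hypothetical name.

```python
import struct

NFS_PROGRAM = 100003  # ONC RPC program number for NFS
CALL = 0              # msg_type value for an RPC call


def parse_rpc_call(record):
    """Decode the record marker and fixed call-header fields of an RPC call."""
    (marker,) = struct.unpack_from(">I", record, 0)
    xid, mtype, rpcvers, prog, vers, proc = struct.unpack_from(">6I", record, 4)
    if mtype != CALL:
        raise ValueError("not an RPC call")
    return {
        "last_fragment": bool(marker & 0x80000000),
        "length": marker & 0x7FFFFFFF,
        "xid": xid,
        "rpcvers": rpcvers,
        "program": prog,
        "version": vers,
        "procedure": proc,
        "is_nfs": prog == NFS_PROGRAM,
    }


# Hand-built example: an NFSv3 WRITE (procedure 7) call header, cut off
# after the procedure number (credentials and arguments omitted).
body = struct.pack(">6I", 0x1234ABCD, CALL, 2, NFS_PROGRAM, 3, 7)
record = struct.pack(">I", 0x80000000 | len(body)) + body
print(parse_rpc_call(record))
```

Procedure 7 in NFS version 3 is WRITE, the same request shown in the screenshot above; Wireshark keeps going from there and decodes the credentials and WRITE3args for you.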

Dealing with xauth “error in locking authority file” errors

This article was posted by Matty on 2011-11-01 17:29:00 -0400

I recently logged into one of my servers and received the following error:

```
$ ssh foo
matty@foo's password:
Last login: Tue Nov 1 13:42:52 2011 from 10.10.56.100
/usr/bin/xauth: error in locking authority file /home/matty/.Xauthority
```

I hadn’t seen this one before, but based on “locking issues” I’ve encountered in the past, I ran strace against xauth to see if an old lock file existed:

$ strace xauth

In the output I saw the following which confirmed my suspicion:

open("/home/matty/.Xauthority-c", O_WRONLY|O_CREAT|O_EXCL, 0600) = -1 EEXIST (File exists)

A quick check with ls revealed the same thing:

```
$ ls -l /home/lnx/matty/.Xauthority-*
-rw------- 2 matty SysAdmins 0 Nov 1 13:42 /home/lnx/matty/.Xauthority-c
-rw------- 2 matty SysAdmins 0 Nov 1 13:42 /home/lnx/matty/.Xauthority-l
```

I removed both of the lock files and haven’t seen the error since. I thought I would pass this on in case someone else encounters this issue.
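The strace output shows xauth creating its lock with O_CREAT|O_EXCL, which fails with EEXIST when the file is already there; that is why a leftover lock trips up every later login. The Python sketch below reproduces that behaviour in a scratch directory and adds a hypothetical helper, stale_xauth_locks, that reports -c and -l lock files older than a cutoff. The cutoff (max_age) is my own assumption, not something xauth defines, so make sure no xauth process is still running before you delete anything it reports.

```python
import errno
import os
import tempfile
import time


def try_create_lock(path):
    """Create `path` exclusively, like the open() call in the strace output.

    Returns True if the lock was created, False if it already exists.
    """
    try:
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    except OSError as e:
        if e.errno == errno.EEXIST:
            return False
        raise
    os.close(fd)
    return True


def stale_xauth_locks(authfile, max_age=600, now=None):
    """Return -c/-l lock files next to `authfile` older than `max_age` seconds."""
    now = time.time() if now is None else now
    stale = []
    for suffix in ("-c", "-l"):
        lock = authfile + suffix
        try:
            age = now - os.stat(lock).st_mtime
        except FileNotFoundError:
            continue  # no lock with this suffix
        if age > max_age:
            stale.append(lock)
    return stale


if __name__ == "__main__":
    # Demo in a scratch directory rather than a real home directory.
    with tempfile.TemporaryDirectory() as d:
        auth = os.path.join(d, ".Xauthority")
        print(try_create_lock(auth + "-c"))  # True: lock created
        print(try_create_lock(auth + "-c"))  # False: EEXIST, as in strace
        print(stale_xauth_locks(auth, max_age=0, now=time.time() + 1))
```

Once you have confirmed the reported locks are really orphaned, removing them (os.remove, or plain rm as I did) clears the error.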

Displaying CPU temperatures on Linux hosts

This article was posted by Matty on 2011-10-29 07:35:00 -0400

Intel and AMD keep coming out with bigger and faster CPUs. Each time I upgrade to a newer CPU (I’m currently eyeing one of these), it seems like the heat sinks and cooling fans have tripled in size (I ran across this firsthand when I purchased a Zalman CPU cooler last year). If you use Linux and a relatively recent motherboard, the motherboard should have a set of sensors you can read the current temperatures from. To access these sensors, you will first need to install the lm_sensors package:

$ yum install lm_sensors

Once the software is installed and configured for your hardware (the sensors-detect script that ships with lm_sensors will probe for supported sensor chips), you can run the sensors tool to display the current temperatures:

```
$ sensors
k8temp-pci-00c3
Adapter: PCI adapter
Core0 Temp:  +14°C
Core1 Temp:  +14°C
```

This is useful information, especially if you are encountering unexplained reboots. Elevated temperatures can lead to all sorts of issues, and lm_sensors is a great tool for helping to isolate these types of problems. Now back to drooling over the latest generation of processors from Intel and AMD. :)
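If you want to check temperatures from a script (for example, from a cron job while you chase those unexplained reboots), the sensors output is easy to parse. The Python sketch below assumes the layout shown above: a chip name, then label: value lines, with a blank line between chips. parse_sensors and too_hot are hypothetical helpers, and the threshold you pass to too_hot is up to you; check your CPU vendor's documentation for a sensible limit.

```python
import re

# Matches "label:  +14°C" style lines from the sensors output shown above.
TEMP_RE = re.compile(r"^(?P<label>[^:]+):\s*(?P<value>[+-]?\d+(?:\.\d+)?)°C")


def parse_sensors(text):
    """Parse `sensors` output into {chip: {label: degrees C}}."""
    readings = {}
    chip = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            chip = None  # a blank line ends the current chip section
            continue
        if chip is None:
            chip = line  # the first line of a section names the chip
            readings[chip] = {}
            continue
        m = TEMP_RE.match(line)
        if m:  # skips non-temperature lines such as "Adapter: PCI adapter"
            readings[chip][m["label"].strip()] = float(m["value"])
    return readings


def too_hot(readings, limit_c):
    """List (chip, label, temperature) readings at or above limit_c."""
    return [(chip, label, t)
            for chip, temps in readings.items()
            for label, t in temps.items()
            if t >= limit_c]
```

If your locale prints the degree sign differently, adjust TEMP_RE to match.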