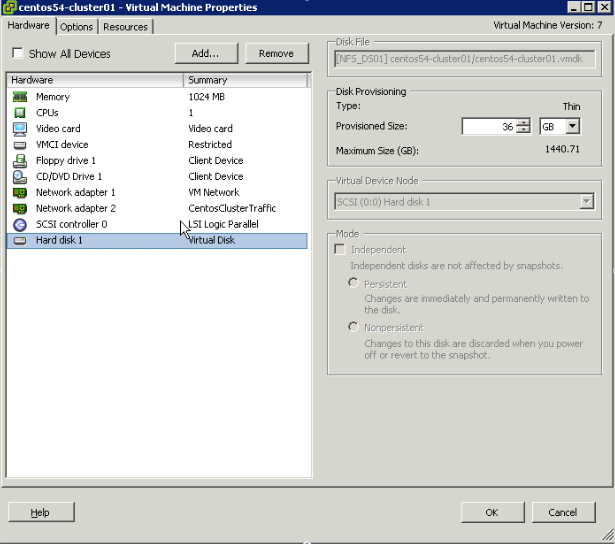

One of my production vSphere virtual machines came close to running out of space this past week. Expanding guest storage with vSphere is a breeze, and I wanted to jot down my notes in this blog post. To expand a virtual disk, open the virtual infrastructure client, right-click your guest and click “Edit Settings”. Once the virtual machine settings window opens, navigate to the disk that you want to expand. On the right side of the screen, click in the “Provisioned Size” text box and specify the new size of the virtual disk.

Now, assuming this is a Linux host, you can run fdisk to see the current size of the disk (replace /dev/sdb with the device that corresponds to the virtual disk you expanded):

$ fdisk -l /dev/sdb

Disk /dev/sdb: 19.3 GB, 19327352832 bytes

255 heads, 63 sectors/track, 2349 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain a valid partition table

fdisk still shows the disk at its original size (19.3 GB in decimal units, which is 18GB in the binary units used in the rest of this post), so we will need to rescan the SCSI bus to pick up the change:

$ /usr/bin/rescan-scsi-bus.sh -r -i

Host adapter 0 (mptspi) found.

Scanning SCSI subsystem for new devices

and remove devices that have disappeared

Scanning host 0 for SCSI target IDs 0 1 2 3 4 5 6 7, all LUNs

Scanning for device 0 0 0 0 ...

OLD: Host: scsi0 Channel: 00 Id: 00 Lun: 00

Vendor: VMware Model: Virtual disk Rev: 1.0

Type: Direct-Access ANSI SCSI revision: 02

Scanning for device 0 0 1 0 ...

OLD: Host: scsi0 Channel: 00 Id: 01 Lun: 00

Vendor: VMware Model: Virtual disk Rev: 1.0

Type: Direct-Access ANSI SCSI revision: 02

0 new device(s) found.

0 device(s) removed.
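If rescan-scsi-bus.sh is not installed, a common alternative is to write to the disk's `rescan` attribute in sysfs. The sketch below is one way to wrap that; the function name `rescan_disk` and its optional second argument (a sysfs root override, there only so the function can be tried against a scratch directory) are my own assumptions and not part of any tool.

```shell
# Ask the kernel to re-read the capacity of a single SCSI disk via sysfs.
# Arguments: $1 = kernel device name without /dev/ (for example sdb)
#            $2 = sysfs root, defaults to /sys (hypothetical override for dry runs)
rescan_disk() {
    dev=$1
    sysfs_root=${2:-/sys}
    dev_dir="$sysfs_root/block/$dev/device"

    # Refuse to write anywhere if the device directory is missing.
    if [ ! -d "$dev_dir" ]; then
        echo "rescan_disk: no such SCSI device: $dev" >&2
        return 1
    fi

    # Writing 1 to the rescan attribute triggers the re-read.
    echo 1 > "$dev_dir/rescan"
}

# On the real host this would be run as root:
#   rescan_disk sdb
```

This only touches the one disk you name, which can be preferable to a full bus scan on a busy host.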

If the rescan completes without issue, the new space should be available:

$ fdisk -l /dev/sdb

Disk /dev/sdb: 38.6 GB, 38654705664 bytes

255 heads, 63 sectors/track, 4699 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain a valid partition table
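fdisk prints sizes in decimal gigabytes, while the LVM tools further down report binary units, so the numbers do not line up at first glance. A small helper (the name `bytes_to_gib` is just for illustration) converts the byte counts from the two fdisk runs:

```shell
# Convert a byte count to whole binary gigabytes (1 GiB = 1024^3 bytes).
bytes_to_gib() {
    echo $(( $1 / 1073741824 ))
}

bytes_to_gib 19327352832   # before the rescan: prints 18
bytes_to_gib 38654705664   # after the rescan:  prints 36
```

So the disk went from 18GB to 36GB in the units that pvdisplay and lvdisplay use.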

This is rad, though the physical volume, logical volume and file system know nothing about the new space. To expand the physical volume to make use of the space, we can run pvresize:

$ pvresize -v /dev/sdb

Using physical volume(s) on command line

Archiving volume group "data_vg" metadata (seqno 4).

No change to size of physical volume /dev/sdb.

Resizing volume "/dev/sdb" to 75497088 sectors.

Updating physical volume "/dev/sdb"

Creating volume group backup "/etc/lvm/backup/data_vg" (seqno 5).

Physical volume "/dev/sdb" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

$ pvdisplay /dev/sdb

--- Physical volume ---

PV Name /dev/sdb

VG Name data_vg

PV Size 36.00 GB / not usable 3.81 MB

Allocatable yes (but full)

PE Size (KByte) 4096

Total PE 9215

Free PE 0

Allocated PE 9215

PV UUID dNSA1X-KxHX-g0kq-ArpY-umVO-buN3-NrPoAt

As you can see above, the physical volume is now 36GB in size. To expand the logical volume that utilizes this physical volume, we can run lvextend and pass the Total PE value from the pvdisplay output (9215) to the -l option, which sets the logical volume to that many extents:

$ lvextend -l 9215 /dev/data_vg/datavol01

Extending logical volume datavol01 to 36.00 GB

Logical volume datavol01 successfully resized

$ lvdisplay /dev/data_vg/datavol01

--- Logical volume ---

LV Name /dev/data_vg/datavol01

VG Name data_vg

LV UUID E2RB58-4Vac-tKSA-vmnW-nqL5-Sr0N-IR4lum

LV Write Access read/write

LV Status available

# open 0

LV Size 36.00 GB

Current LE 9215

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:3
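As a sanity check, the extent count can be roughly reproduced from the sector count that pvresize printed. This is a back-of-the-envelope sketch with made-up helper names; LVM's own accounting also sets aside space for metadata, so treat it as a cross-check that happens to agree with the output above and not as the way LVM computes things.

```shell
# Whole extents that fit in a number of 512-byte sectors.
# $1 = sectors, $2 = PE size in KiB (one extent is PE_KiB * 2 sectors).
sectors_to_extents() {
    echo $(( $1 / ($2 * 2) ))
}

# Size in MiB of a number of extents.
# $1 = extents, $2 = PE size in KiB.
extents_to_mib() {
    echo $(( $1 * $2 / 1024 ))
}

sectors_to_extents 75497088 4096   # prints 9215, matching Total PE
extents_to_mib 9215 4096           # prints 36860, just under 36GB (36864 MiB)
```

The few MiB left over after the last whole extent is what pvdisplay reports as "not usable".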

Sweet! Now that the logical volume is 36GB in size, we can use the resize2fs command to increase the size of the ext3 file system that resides on the logical volume:

$ resize2fs /dev/data_vg/datavol01

resize2fs 1.39 (29-May-2006)

Filesystem at /dev/data_vg/datavol01 is mounted on /datavol01; on-line resizing required

Performing an on-line resize of /dev/data_vg/datavol01 to 9436160 (4k) blocks.
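The block count that resize2fs reports can be checked against the logical volume size. The helper below is only an illustration (the name is mine); it converts the extent count from lvdisplay into file system blocks:

```shell
# Number of file system blocks that fit in a logical volume.
# $1 = extents (Current LE), $2 = PE size in KiB, $3 = block size in KiB.
extents_to_fs_blocks() {
    echo $(( $1 * $2 / $3 ))
}

extents_to_fs_blocks 9215 4096 4   # prints 9436160, the target resize2fs reported
```

If the two numbers agree, the file system is being grown to fill the whole logical volume.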

The resize2fs utility will take a few minutes to run, and should tell you that it is increasing the size of the file system to some number of blocks. If this step completes without error, you should see the new space in the df output (this assumes the file system is mounted):

$ df -h /datavol01

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/data_vg-datavol01

36G 17G 18G 49% /datavol01
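To keep the order of operations handy, here is a small sketch that prints the commands for a given disk, volume group and logical volume without running any of them. The function name `grow_lvm_plan` is hypothetical. It also swaps in `lvextend -l +100%FREE` in place of the explicit extent count I used above, which asks LVM to hand all free extents in the volume group to the logical volume; that is an assumption about what you want, so adjust it if the volume group holds other volumes.

```shell
# Print (do not run) the commands that grow an LVM-backed ext3 file system
# after the underlying virtual disk has been enlarged.
# $1 = disk device, $2 = volume group, $3 = logical volume
grow_lvm_plan() {
    disk=$1
    vg=$2
    lv=$3
    echo "/usr/bin/rescan-scsi-bus.sh -r -i"
    echo "pvresize -v $disk"
    echo "lvextend -l +100%FREE /dev/$vg/$lv"
    echo "resize2fs /dev/$vg/$lv"
}

grow_lvm_plan /dev/sdb data_vg datavol01
```

Reviewing the printed plan before typing anything as root is a cheap way to catch a wrong device name.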

Using the steps listed above, I was able to double the size of the virtual disk attached to my virtual machine. While this procedure should work flawlessly, I provide zero guarantees that it will. Use this procedure at your own risk, and make sure to test it out in a non-production environment before doing ANYTHING with your production hosts!! I really really dig vSphere, and hope to blog about it a ton in the near future.